Affective Computing

“Artificial Intelligence (AI) is the part of computer science concerned with designing intelligent computer systems, that is, systems that exhibit the characteristics we associate with intelligence in human behavior — understanding language, learning, reasoning, solving problems, and so on.”— Handbook of Artificial Intelligence, vol. I, p. 3

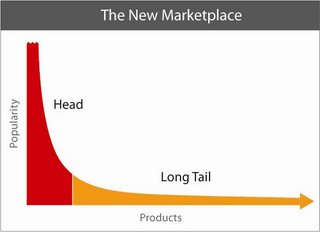

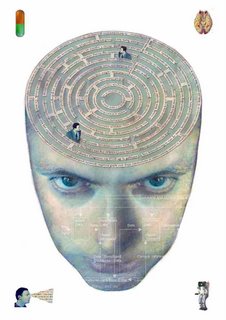

The ultimate goal of AI is to equal or surpass human intelligence by simulating the “highest” human faculties: language, discursive reason, mathematics, and abstract problem solving. Rosalind Picard suggests that computers will need emotions to be truly intelligent, and in particular to interact intelligently with humans. But will AI technology actually advance to the point of recognizing and expressing human emotions? Traditional AI rests on the premise that machine intelligence can be obtained through algorithmic logic. Emerging research in the field of affective computing, however, strives to integrate emotion into computational models.

For example, Javier Movellan's neurocomputing research has produced systems that can identify hundreds of ways faces show joy, anger, sadness and other emotions. These systems operate by recognizing patterns learned from a multitude of images, and they are expected eventually to detect millions of expressions. An artificial neural network (ANN) is an information-processing paradigm inspired by the way biological nervous systems, such as the brain, process information. Experiments with ANN models have demonstrated an ability to learn such skills as facial recognition, reading, and the detection of simple grammatical structure.
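To make the idea of "recognizing patterns learned from examples" concrete, here is a minimal sketch of a single artificial neuron (a perceptron) trained on labeled samples. It is not Movellan's method or any real facial-analysis system: the two features, their values, and the function names `train_perceptron` and `predict` are all invented for illustration, and real systems work from images with far larger networks.

```python
# Toy illustration only: feature values are invented, not real facial data.

def train_perceptron(samples, labels, epochs=20, lr=0.1):
    """Learn weights for one artificial neuron using the perceptron rule."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            predicted = 1 if activation > 0 else 0
            error = target - predicted  # 0 when the guess is already right
            # Nudge each weight toward the correct answer.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, x):
    """Return 1 or 0 depending on which side of the learned boundary x falls."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


# Hypothetical features: (mouth-corner lift, brow lowering), each in [0, 1].
samples = [(0.9, 0.1), (0.8, 0.2), (0.7, 0.0),
           (0.1, 0.9), (0.2, 0.8), (0.0, 0.7)]
labels = [1, 1, 1, 0, 0, 0]  # 1 = "joy", 0 = "anger" (made-up labels)

weights, bias = train_perceptron(samples, labels)
```

After training, `predict(weights, bias, (0.85, 0.15))` classifies a previously unseen sample. The network is never told a rule for "joy"; it adjusts its weights only from the labeled examples, which is the sense in which such systems learn patterns.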

Embedded software agents such as smart agents, Web robots, bots and other collaborative agents are now routinely used to manage workflow, networks, information retrieval, e-commerce and a host of other complex services, and with their prevalence the perception of machine intelligence is shifting. Technology may never replicate human emotional experience, but modest advances in human-scale affective artificial intelligence could transform applications from mere mathematical processing units into assistive and perceptive entities.